Team Skills Mapping & Development Planning

Context

As our design team expanded and the scope of our product work grew, we needed a comprehensive and objective view of our capabilities. I led an initiative to map skills across the entire design team, identify strengths and growth areas, and create a framework for targeted development. The framework helped the team meet evolving product demands while supporting individual career growth.

Challenge

Team growth and increasing product complexity made it unclear where our skills were concentrated or where gaps existed.

Without this insight, hiring and staffing decisions risked being reactive rather than strategic.

Knowledge and expertise were often siloed, limiting opportunities for cross-training and collaboration.

My Role

Designed and drove the entire process from initial concept through to implementation.

Facilitated team-wide workshops to collaboratively define a skill taxonomy tailored to our needs.

Developed and administered a balanced assessment process combining self-ratings and peer feedback.

Translated raw data into clear visualizations to make patterns and gaps immediately visible.

Partnered with leadership to act on findings and integrate them into staffing, hiring, and development plans.

Process

Defining Skills

Collaborated with the team to identify and define core skill categories such as Research, Design Communication, Visual Design, and Technical Literacy.

Assessment Design

Built a repeatable evaluation process that encouraged honest self-assessment and constructive peer input, fostering shared ownership of growth.

Data Analysis & Visualization

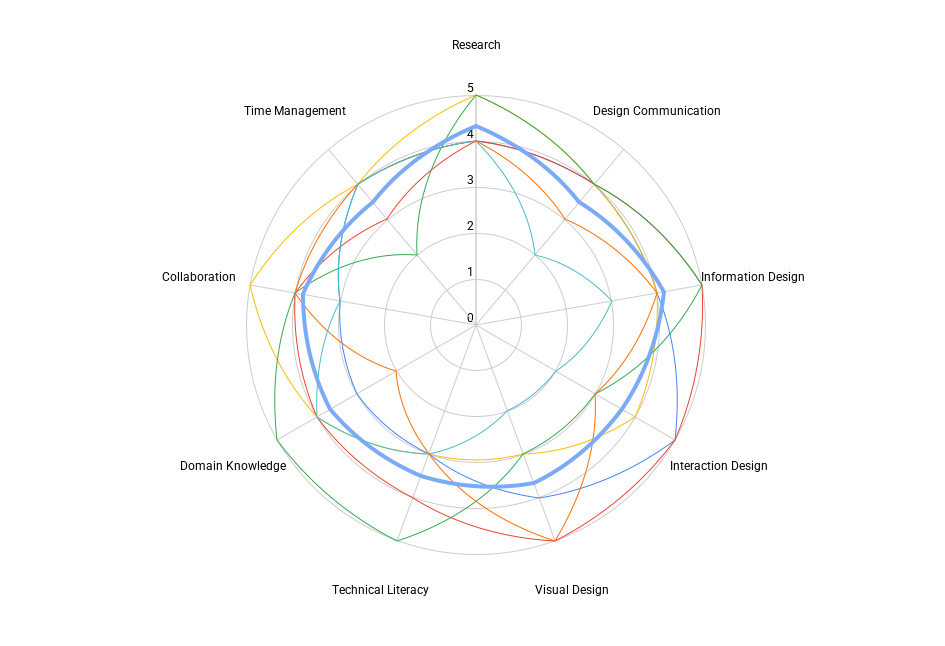

Created radar charts to compare individual and team skill distributions, highlighting areas of strength and opportunities for development.

Action Planning

Used findings to recommend targeted hiring and launch skill-specific training initiatives.

Outcomes

Strategic Hiring

Data informed the recruitment of specialists in critical skill areas, closing key capability gaps.

Focused Development

Increased team self-awareness, fostered peer-to-peer learning, and encouraged proactive skill growth.

Collaborative Growth

Facilitated knowledge sharing and cross-training opportunities, helping individuals identify strengths to build on and areas to develop.

Ongoing Evolution

At the end of 2023, we updated the skill framework to reflect shifts in team scope, product priorities, and technology. We have since completed two full assessment cycles with the updated model, ensuring relevance while maintaining consistency in measurement.

Impact Visualization

Team skill profiles from three assessment cycles: April 2021, January 2022, and January 2023.

April 2021 → January 2022

Between the first two assessment cycles, scores improved markedly in several core areas, including Design Communication, Interaction Design, and Visual Design. Average scores rose in most categories, and strengths became more evenly distributed across team members, reducing reliance on single individuals for specialized skills.

January 2022 → January 2023

The third cycle confirmed continued growth, particularly in Collaboration, Domain Knowledge, and Technical Literacy. These gains reflected the impact of targeted mentorship pairings and focused training programs. The overall skill profile became more balanced, with fewer extreme highs and lows, which gave us greater flexibility in project staffing.

Strategic Outcomes from Data

These visual comparisons guided hiring priorities, shaped our mentorship and training focus, and reinforced the importance of maintaining a living skills framework to adapt to evolving team needs.